Transformers have taken most fields of deep learning by storm. While they found success in the NLP community almost immediately after the Attention Is All You Need paper was published in 2017 and the BERT model in 2018, it took longer for them to shake up the computer vision community. That happened only in 2020, when the DEtection TRansformer (DETR) work showed competitive results on object detection tasks. DETR brings two main new concepts: 1) object queries and 2) object detection framed as a set prediction problem.

At the end of last year, I defended my master’s dissertation: A Performance Increment Strategy for Semantic Segmentation of Low-Resolution Images from Damaged Roads. This year, fortunately, I was awarded first place in two contests: Workshop de Visão Computacional (WVC 2023) and Workshop-Escola de Informática Teórica (WEIT 2023). My dissertation can be found here.

Recently, I had the chance to delve into transformers, specifically visual transformers such as DETR, ViT, and Mask2Former. These studies resulted in an introductory lecture on visual transformers, prepared as a contribution to my advisor’s Computer Vision course.

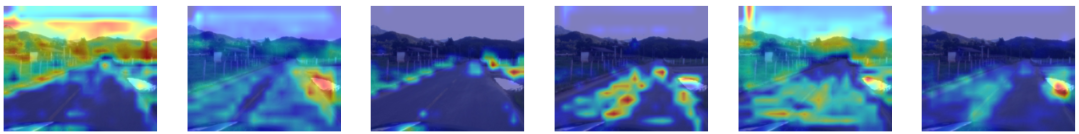

Recently, I have been playing with CNN visualization and interpretability. I tried the Grad-CAM approach, which extends the Class Activation Mapping (CAM) work. CAM ends the network with a global average pooling layer followed by a dense layer that outputs the class logits; the weight connecting unit $k$ of the global pooling layer to the output neuron of class $c$ represents the importance of feature map channel $k$ for that class.
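
The CAM idea described above can be sketched in a few lines of NumPy. This is only a minimal illustration under stated assumptions: the feature maps and dense weights are random stand-ins rather than outputs of a trained network, the dense layer has no bias, and the names `feature_maps`, `dense_weights`, and `class_activation_map` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: K channels of H x W activations, C classes.
K, H, W = 8, 7, 7
C = 3

# Random stand-ins for the last conv layer's output A_k and the
# dense-layer weights w_k^c (assumption: no bias term).
feature_maps = rng.random((K, H, W))
dense_weights = rng.normal(size=(C, K))


def class_activation_map(feature_maps, dense_weights, c):
    """Weighted sum over channels: CAM_c(i, j) = sum_k w_k^c * A_k(i, j)."""
    return np.tensordot(dense_weights[c], feature_maps, axes=1)


# Forward pass of the CAM head: global average pooling, then dense layer.
pooled = feature_maps.mean(axis=(1, 2))   # shape (K,)
logits = dense_weights @ pooled           # shape (C,)

c = int(np.argmax(logits))                # class with the highest logit
cam = class_activation_map(feature_maps, dense_weights, c)
print(cam.shape)
```

Because pooling and the dense layer are both linear, the spatial mean of the map for class $c$ equals that class's logit, which is why the dense weights can be read as per-channel importances.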

This post shows how to arrive at the optimization objective of the VAE and highlights a few insights about its main concepts. Notations: \(x\): Data or evidence. \(z\): Latent variables. \(\theta\): Decoder parameters. \(\phi\): Encoder parameters. \(q_\phi(z|x)\): Encoder probabilistic model. \(p_\theta(x|z)\): Decoder probabilistic model. \(p(z)\): Prior probability distribution of \(z\). \(z \sim q(z|x)\): the random variable \(z\) is distributed according to \(q(z|x)\). VAE The VAE framework provides a computationally efficient way to optimize a Deep Latent Variable Model (DLVM) jointly with a corresponding inference model using Stochastic Gradient Descent (SGD).
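
For orientation, the objective usually reached by this kind of derivation is the evidence lower bound (ELBO). The form below is the standard one from the VAE literature, written in the notation listed above; it is given here as a reference point, not as a quote from the post.

\[
\log p_\theta(x) \;\ge\; \mathbb{E}_{z \sim q_\phi(z|x)}\big[\log p_\theta(x|z)\big] \;-\; D_{KL}\big(q_\phi(z|x) \,\|\, p(z)\big)
\]

The first term rewards reconstructions of \(x\) from samples of \(z\), and the second keeps the encoder distribution close to the prior \(p(z)\).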

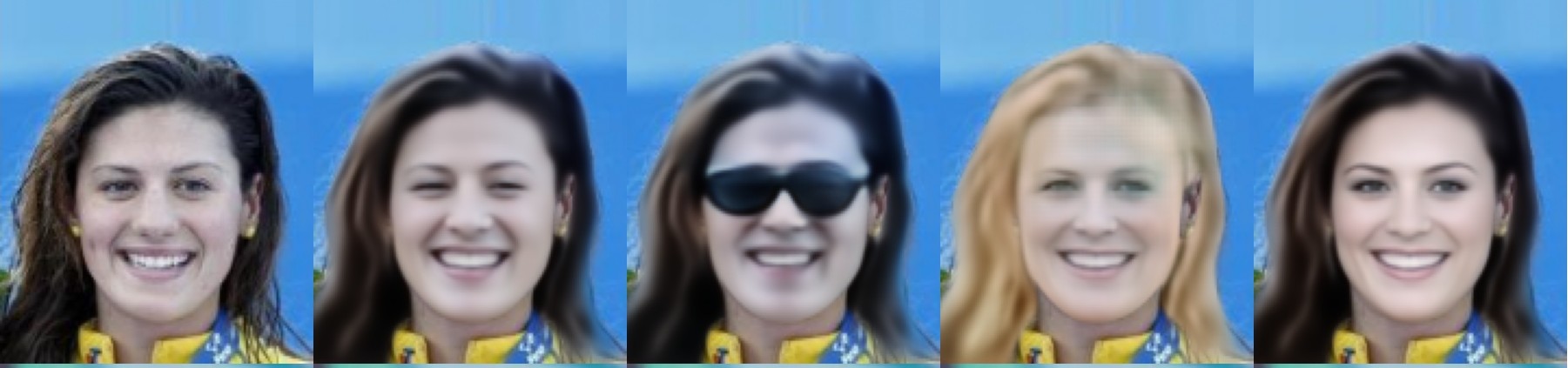

You can play with the model and the face edits in Colab, or check out the code in the GitHub repo. 1. Introduction This article gives a quick overview of a recent project I developed during my master’s on how to edit facial attributes using a Variational Autoencoder (VAE)[1][5]. In practical terms, the VAE architecture is similar to that of a vanilla Autoencoder.

Introduction Bayesian optimization is usually a faster alternative to grid search when we’re trying to find the best combination of hyperparameters for an algorithm. In Python, there’s a handy package for applying it: bayes_opt. This post is a code snippet to get started using the package’s functions along with xgboost to solve a regression problem. The Code Preparing the environment. import pandas as pd import numpy as np import xgboost as xgb from sklearn import datasets import bayes_opt as bopt boston = datasets.

Often it’s not possible to know the exact distribution of a random variable (RV), so we must estimate it. There are some tactics for approaching this problem. Here, I’ll share some notes on what I’ve been studying to deal with it: bound inequalities and limit theorems. 1. Bounds using inequalities There are useful inequalities that help us bound the tail probability of a distribution beyond a specific value \(a\) when the mean and the variance are easy to compute but we have no other information about the distribution.
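
Two classic bounds of this kind are Markov's inequality, \(P(X \ge a) \le E[X]/a\) for a nonnegative RV and \(a > 0\), and Chebyshev's inequality, \(P(|X - \mu| \ge k) \le \sigma^2/k^2\). The sketch below compares them with a simulated tail probability. The choice of an exponential distribution with rate 1, the threshold \(a = 3\), and the function names are assumptions made only for this illustration.

```python
import random

random.seed(0)

# Assumption: exponential RV with rate 1, so mean = 1 and variance = 1.
rate = 1.0
mean = 1.0 / rate
variance = 1.0 / rate**2
samples = [random.expovariate(rate) for _ in range(100_000)]


def empirical_tail(samples, a):
    """Fraction of samples with X >= a."""
    return sum(x >= a for x in samples) / len(samples)


def markov_bound(mean, a):
    """Markov: P(X >= a) <= E[X] / a, for nonnegative X and a > 0."""
    return min(1.0, mean / a)


def chebyshev_bound(mean, variance, a):
    """Chebyshev applied to the upper tail, valid for a > mean:
    P(X >= a) <= P(|X - mean| >= a - mean) <= variance / (a - mean)**2."""
    return min(1.0, variance / (a - mean) ** 2)


a = 3.0
tail = empirical_tail(samples, a)
print(f"empirical tail:  {tail:.4f}")
print(f"Markov bound:    {markov_bound(mean, a):.4f}")
print(f"Chebyshev bound: {chebyshev_bound(mean, variance, a):.4f}")
```

Both bounds hold but are loose here, which is expected: they use only the mean and the variance and so must cover every distribution sharing those moments.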

Preface This post presents concepts behind how the Decision Tree (DT) algorithm works. To illustrate them, I’m going to use the Iris dataset and the R language, with the dplyr grammar. library(tidyverse) library(magrittr) library(knitr) library(GGally) library(rpart) library(rattle) library(rpart.utils) iris <- iris %>% as_tibble() The Iris dataset consists of length and width measurements of the petals and sepals of three species of Iris flower. The flowers are shown below: